Sorting Algorithms with CUDA!

Building on my previous post on sorting algorithms, I implemented the same algorithms using CUDA to explore performance improvements through parallel computing. The goal is to see how we can leverage the power of parallel computing to speed up our sorting algorithms. I went for a NVIDIA recruiting event some days ago, that was a great event and it motivated me to try to rewrite the sorting algorithms using CUDA.

I’ll take merge sort as our test algorithm because it nicely divides the problem into smaller subproblems with two equal halves, which is a good fit for parallel computing.

Basic Recursive Top Down Merge Sort

Below is the basic top down merge sort logic, where I recursively divide the array into two halves until I reach the base case of a single element, and then merge the sorted halves back together.

To merge two sorted arrays, we compare their starting elements, pick the smaller one for the output array, and move the pointers forward.

MERGE_SORT(arr, left, right)

IF left < right THEN

mid ← left + (right - left) / 2

// Recursively sort first half

MERGE_SORT(arr, left, mid)

// Recursively sort second half

MERGE_SORT(arr, mid + 1, right)

// Merge the sorted halves

MERGE(arr, left, mid, right)

ENDIF

END MERGE_SORT

Now let’s look at the CPU implementation which is linked below:

Code: Basic Recursive Merge Sort on CPU

Notes:

- Function Signatures:

-

void merge(uint8_t* arr, uint8_t* temp, long long left, long long mid, long long right):-

uint8_tinstead ofintfor the array elements is done to keep values small(0-255) -

long longfor the indices allows for very large arrays(10^18) -

uint8_t* tempacts as scratch space for merge operation and give performance improvements

-

-

void mergeSort(uint8_t* arr, uint8_t* temp, long long left, long long right)follows pseudo code which divides the array into two halves and calls itself on those two halves. When it reaches the base case of a single element, it calls the merge function to merge the two halves back together.

-

- GPU vs CPU sorting:

- Arrays are generated with passed arguments with specific seed(i.e. 1)

- All implementations do nearly the same amount of work

- Merge sort result needs to be called back to the original array from GPU to CPU which is an overhead and compared with sorted array using

std::sortfrom CPU - A better comparison could be sorting random arrays on the GPU itself and comparing the results

- How we do sorting and where we do sorting matters a lot depending on the larger context of the use

-

Wall Clock timeto run the whole program is used for the plots and not just the time taken to sort the array -

Correctness checkingis done by sorting the original array by using thestd::sortand compare the results - Time Complexity with Average Case: O(n log n)

- Space Complexity: O(n)

Basic Recursive Top Down Merge Sort in CUDA

Now, let’s see how we can implement this in CUDA. It follows the same pattern as the CPU implementation. It’s my first naive implementation on CUDA. The kernel is launched for each merge operation and recursion is done on CPU.

Code: Basic Recursive Merge Sort with CUDA

Notes:

-

#include <cuda_runtime.h>provides access to the CUDA Runtime API and functions likecudaMalloc(),cudaMemcpy(),cudaFree(),kernel<<<numBlocks, threadsPerBlock>>>(args),cudaGetErrorString(),cudaGetLastError() -

__global__ void mergeSort(uint8_t* arr, uint8_t* temp, long long left, long long right)is the kernel function which is launched for each merge operation which for now does exactly the same thing as the CPU implementation - Within

void mergeSort(....)-

merge<<<1, 1>>>(...)launches the kernel for each merge operation but right now just launches a single thread to do entire merge which is not efficient.<<<1,1>>>specifies the number of thread blocks and num of threads per thread block.<<<numBlocks, blockSize>>>is the syntax for launching a kernel in CUDA. Number of threads you have in total isnumBlocks * blockSizeand they can be arranged in 1D, 2D, or 3D grid. -

cudaDeviceSynchronize()makes it wait for this merge to complete before moving onto next stage to avoid correctness issues. -

cudaMalloc(....)is used to allocate memory on GPU.cudaMemcpy(..., cudaMemcpyHostToDevice)andcudaMemcpy(...., cudaMemcpyDeviceToHost)can be used to copy data between CPU and GPU. -

cudaFree(cu_arr)is used to free the memory on GPU.

-

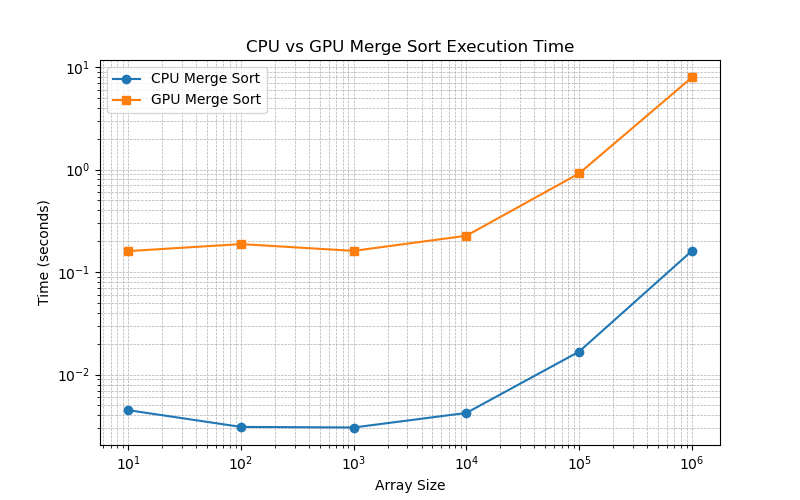

Comparision between CPU and GPU implementation of basic recursive merge sort

This implementation is not very efficient as you can see in Figure 1, the kernel is launched for each merge operation and recursion is done on CPU. CUDA does not handle recursion efficiently, so we need to flatten the recursion into a loop.

Big questions I had:

- Why does CUDA doesn’t handle recursion well?

- Our merge function is launched as a single thread on the GPU and the recursion is done on the CPU. Deep recursion is problematic as it can lead to stack overflow given the small size of threads on the GPU. There is a non trivial kernel launch overhead for each merge operation. Recursion doesn’t allow for a lot of parallelism. Syncronization is also a problem.

- How can we make things better?

- Rewrite the recursion into an iterative loop and do bottom up merge sort.

Unlike CPU implementations, where recursion is commonly used, CUDA requires an iterative approach with careful memory management and thread synchronization to achieve optimal performance as shown in implementations below.

Bottom Up Iterative Merge Sort

Since CUDA does not efficiently handle recursion due to stack limitations, we implement an iterative approach for merge sort instead. Core of the iterative approach is to merge the array in a bottom up manner. We start by merging the smallest subarrays of size 1, then merge the subarrays of size 2, then 4, 8, 16, and so on.

MERGE_SORT(arr, temp, start, end)

FOR sub_size ← 1 TO end STEP 2 × sub_size DO

FOR left ← 0 TO end STEP 2 × sub_size DO

mid ← MIN(left + sub_size - 1, end)

right ← MIN(left + 2 × sub_size - 1, end)

MERGE(arr, temp, left, mid, right)

ENDFOR

ENDFOR

END MERGE_SORT

Now let’s look at the CPU implementation which is linked below:

Code: Iterative Merge Sort on CPU

Notes:

void mergeSort(uint8_t* arr, uint8_t* temp, long long n) {

long long left, mid, right, size;

for (size = 1; size < n; size *= 2) {

for (left = 0; left < n - size; left += 2 * size) {

mid = left + size - 1;

right = std::min(left + 2 * size - 1, n - 1);

mergeKernel(arr, temp, left, mid, right);

}

}

}

We have flattened the recursion into a loop:

-

Top for loopincreases the size from1 to ninpowers of 2so we have sizes1,2,4,8. One might worry that what about arrays that don’t nicely fit as powers of 2, it was worry for me too and it’s handled nicely byclamping the right index to the end of the array. -

Inner for loopgoes over the array in steps of 2*size and merges the subarrays of sizesizestarting fromlefttorightandmidis the middle of the subarray. Noticeright = std::min(left + 2 * size - 1, n - 1);which clamps the right index to the end of the array. -

mergeKernelis the same asmergefunction in the recursive approach but now it’s called in a loop.

Bottom Up Iterative Merge Sort in CUDA

Major takeaway for me personally come from this implementation. In the above implementation there are two loops, so my thought was to do second loop in parallel on GPU which mainly does the merge operations in parallel for the entire array.

void mergeSort(uint8_t* arr, uint8_t* temp, long long n) {

bool flipflop = true;

long long numThreads, gridSize;

long long size; // size means the merge arrays sizes

for (size = 1; size < n; size *= 2) {

numThreads = max(n / (2 * size), (long long)1);

gridSize = (numThreads + THREADS_PER_BLOCK - 1) / THREADS_PER_BLOCK;

mergeKernel<<<gridSize, THREADS_PER_BLOCK>>>(flipflop ? arr : temp, flipflop ? temp : arr, size, n);

CUDA_CHECK(cudaGetLastError());

CUDA_CHECK(cudaDeviceSynchronize());

flipflop = !flipflop;

}

if (!flipflop) CUDA_CHECK(cudaMemcpy(arr, temp, n * sizeof(uint8_t), cudaMemcpyDeviceToDevice));

}

Notes:

-

flipflopis used to keep track of which array is the final sorted array and which is the scratch space. -

numThreadsis the number of threads we need to launch for the merge operation.gridSizeis the number of blocks we need to launch. - After size of the merge arrays is calculated:

- Given the size of the merge arrays, merge happens on two subarrays of size

size. Hence I need to launchn / (2 * size)threads(that 1 helps in case where size becomes bigger n/2). -

gridSizeis calculated by dividing the number of threads byTHREADS_PER_BLOCKand rounding up. Grid size is the number of blocks we need to launch. - In mergeKernel we specify gridSize and THREADS_PER_BLOCK to launch the kernel.

mergeKernel<<<gridSize, THREADS_PER_BLOCK>>>(flipflop ? arr : temp, flipflop ? temp : arr, size, n);notice the ternary operator to switch between the arrays where arr and temp serve asping-pong bufferswhere based on state of flipflop we read from one and write to another. -

CUDA_CHECK(cudaGetLastError());andCUDA_CHECK(cudaDeviceSynchronize());are used to check for errors and to make sure the kernel has finished executing before moving on to the next stage.

- Given the size of the merge arrays, merge happens on two subarrays of size

-

if (!flipflop) CUDA_CHECK(cudaMemcpy(arr, temp, n * sizeof(uint8_t), cudaMemcpyDeviceToDevice));is used to copy the final sorted array back to the original array if the final sorted array is in the temp array.

Now let’s look at the mergeKernel which is shown:

__global__ void mergeKernel(uint8_t* arr, uint8_t* temp, long long curr_size, long long n) {

long long index = blockIdx.x * blockDim.x + threadIdx.x;

long long left = 2 * curr_size * index;

if (left >= n) return;

long long mid = min(left + curr_size - 1, n - 1);

long long right = min(left + 2 * curr_size - 1, n - 1);

long long i = left, j = mid + 1, k = left;

///.... below is the good old merge logic

}

Notes:

- It took me a ton of time to understand the indexing and how to launch the kernel for the merge operation properly. I launch 1d grids and 1d blocks.

blockIdx.xgives the block index which could be 1,2,3,4,… and thenblockDim.xspecifies the number of threads in a block.blockIdx.x * blockDim.xafter we add itthreadIdx.x(0 to THREADS_PER_BLOCK-1) gives the index of the thread globally where each index is unique. - Now we are able to uniquely identify our thread globally, we want to give it a

unique subproblemdepending on thisindex. We now shift our focus on calculating theleft,mid, andrightindices for the subarray we want to merge. We have a array of size n, each thread is supposed to deal with subproblems of size2 * curr_sizestarting fromlefttoright. -

Most Important question: how many indexes I have? index=blockIdx.x * blockDim.x + threadIdx.xand if the blockIdx.x is 0 and threadIdx.x is 0, we have minimum index as 0. We know max blockIdx.x is gridSize-1. So the maximum index is(gridSize-1) * blockDim.x + blockDim.x - 1creatinggridSize * blockDim.x -1as max. If we replace gridSize withnumThreads + THREADS_PER_BLOCK -1 / THREADS_PER_BLOCKand blockDim.x withTHREADS_PER_BLOCK, we getnumThreads + THREADS_PER_BLOCK - 2. So the maximum index is approximatelyn / 2 × curr_sizeto cater to our subproblems with some extra threads. - Given we have indexes from 0 to

n/2 * curr_size, we can calculate theleft indexas2 * curr_size * indexwhich roughly covers all of the array. If we haveleft >= nwe return as we have covered the whole array. There was an interesting edge case where this left was previously anintleading to overflow and I had to change it tolong long, I found that error withcompute-sanitizerand using debug symbols by using-g -Gwhile compiling with nvcc. - After we find

left,mid,right, it’s the same old merge logic. - I tried with different values of

THREADS_PER_BLOCKbut results were almost the same.

Results

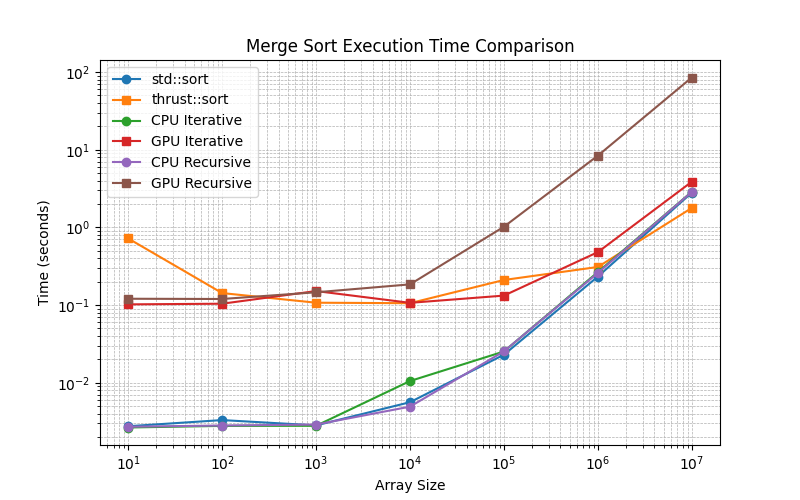

We defined the task of generating the random arrays on CPU, doing sorting on CPU/GPU and then comparing with the standard sorting method std::sort on CPU.

- Bottom-up iterative merge sort in CUDA significantly improves efficiency by parallelizing merge operations

- It’s not unexpected to see that

CPU approachesdo better for smaller arrays for the overall wall clock times of the program. -

thrust::sortcomes out to be better than my implementations for larger arrays whereGPU iterative methodisreasonably competitivewhile recursive approach is way behind. -

CPUapproaches i.e. recursive and iterative are very competitive withstd::sort - 10^7 is the tipping point where the GPU sort with

thrust::sortbeats the standard sorting on CPU and my implementationGPU iterativecomes very close too. - The overhead for sending the data to the GPU and bringing back data from GPU is likely the cause for the time difference between CPU and GPU approaches for size 10^1 to 10^4.

Conclusion and Future Work

I learned a lot about merge sort which had elluded me for a very long time. This simple algorithm also gave a very nice opportunity to learn the basics of CUDA with medium difficulty. There are several things that I could have done better and I would like to explore in the future:

- Define the tasks better or more tasks where goals could be:

- Things need to start from CPU and endup on the GPU only for further computation or vice versa

- Things need to start and end on the individual devices only.

- Things need to start on GPU and go to CPU for further computation.

- Attempt implementing

Parallel Merge Sortas suggested byProf RezaulatSBUin references [1] - Compare way bigger arrays going from

10^7 to 10^18andstress testinghow much sorting we can do on either of the devices. - Optimize further for performance on GPU by using

shared memory, usingthrust:sortatspecific levelin combination with my implementation like we do long multiplication in the karatsuba algorithm for size of n < 20 as I was taught inCSE 201byProf Sesh. - Utilize the threads in CPU implementations to see if that can be useful for improving performance.

- Compare the effects of using different sizes of

THREAD_PER_BLOCKand maybe rather than each thread solving 1 subproblems,each threads solve more than 1 subproblemsgiven we are waiting till all threads finish.

References:

Additional Resources:

Enjoy Reading This Article?

Here are some more articles you might like to read next: